Visual Testing Storybook with Playwright

Overview

Running a successful design system requires more than just providing components and documentation. It requires trust: trust that the artefacts you provide for others to use are well tested, so things don't break between releases. Semantic versioning acts as the contract between your library and its users, and holding up your end of that bargain by not introducing breaking changes on every release goes a long way toward building rapport and increasing adoption.

There are many types of tests to consider when covering your bases, such as unit tests, integration tests, and manual tests. The type I've found most effective as a system scales is visual testing. Visual tests work by capturing screenshots of two separate states and comparing them for differences. For example, if you've changed one of your components, a visual test flags the change by comparing the pixels in the before and after screenshots and highlighting the differences as a heat map.
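The following is a naive sketch of the underlying idea. It assumes both screenshots have already been decoded into RGBA buffers of the same size. It is not how Playwright or hosted tools compute diffs. Playwright, for example, applies a perceptual color threshold. The names diffImages and tolerance are illustrative only.

```typescript
// Result of comparing two screenshots pixel by pixel.
interface DiffResult {
  diffPixels: number; // how many pixels changed
  mask: Uint8Array; // 1 where a pixel changed, 0 where it didn't: a crude "heat map"
}

// Naive comparison of two RGBA buffers (4 bytes per pixel) of the same size.
// `tolerance` is the largest per-channel difference (0-255) still treated as equal.
function diffImages(
  before: Uint8ClampedArray,
  after: Uint8ClampedArray,
  width: number,
  height: number,
  tolerance = 0,
): DiffResult {
  const pixelCount = width * height;
  if (before.length !== pixelCount * 4 || after.length !== before.length) {
    throw new Error("Both images must be RGBA buffers of the same dimensions");
  }

  const mask = new Uint8Array(pixelCount);
  let diffPixels = 0;

  for (let p = 0; p < pixelCount; p++) {
    const offset = p * 4;
    // Compare R, G, B and A; any channel outside the tolerance marks the pixel as changed.
    for (let channel = 0; channel < 4; channel++) {
      if (Math.abs(before[offset + channel] - after[offset + channel]) > tolerance) {
        mask[p] = 1;
        diffPixels++;
        break;
      }
    }
  }

  return { diffPixels, mask };
}
```

A non-zero diffPixels count is what fails a visual test. Rendering the mask as an overlay on the screenshot gives you the heat map.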

Enter Playwright and Storybook

You can implement this type of test in several ways, and I've tried most of them. Third-party tools such as Percy or Chromatic can handle it for you, but depending on how your team operates, you may not need them. A browser automation library such as Playwright or Puppeteer is usually sufficient. These libraries excel at browser-level automation and handle the capturing, and Playwright's test runner can also do the comparing. Combine one with Storybook, a tool for building and showcasing your components in isolation, and you can get a full visual test suite for your component library up and running in just a few steps.

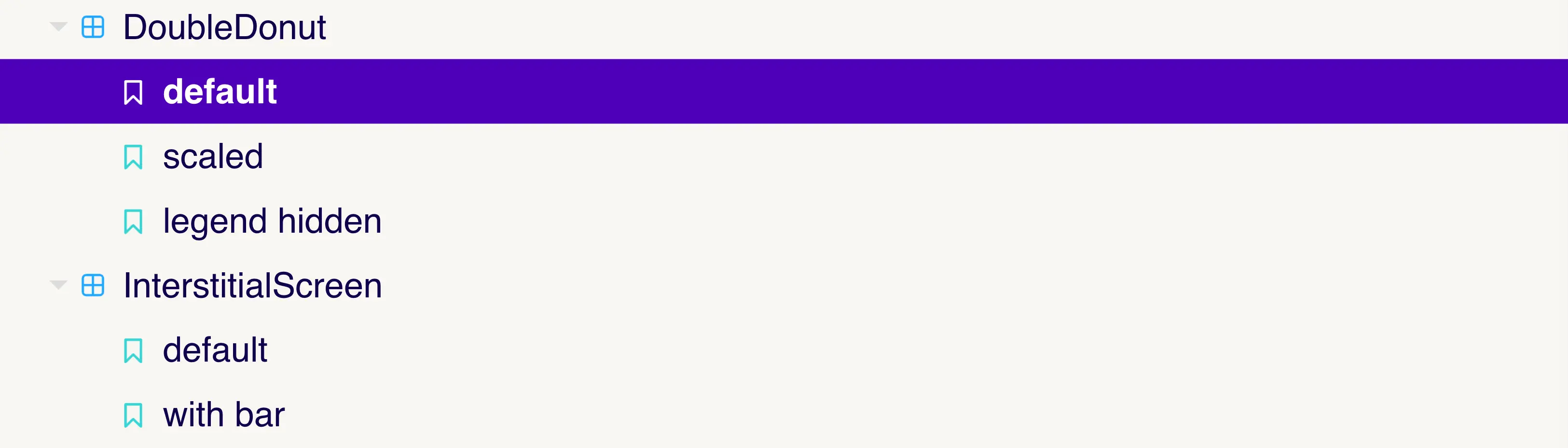

Defining Stories

The way you define your Storybook stories affects how your visual tests behave. We'll be comparing the before and after of every story, so each story must present its component consistently.

Here's a list of things I try to think about when it comes to Storybook:

- Component examples should be pure. There should be no external styles or headers above the components, just a pure representation of the component. They should represent what a user would see if using your component.

- One state per story. If your component has multiple states, give each one its own story. This increases your test coverage, because every state is validated on its own.

- Represent real-world examples. Fill your component samples with content and imagery that reflect how your users will actually use your components. This helps you better validate things like text wrapping and image sizing.

- Avoid dynamic content. Randomized or animation-heavy content can look different on every render, which makes visual tests harder to keep stable. You'll have a much easier time if you keep things in a consistent state.
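Here is a rough sketch of what those guidelines might look like in practice. It uses the "My Element" component from later in this article, with one state per story and fixed, realistic content. To stay dependency-free, it uses a local stand-in type rather than Storybook's own StoryObj type. The args and their values are hypothetical.

```typescript
// Hypothetical args for the "My Element" component used throughout this article.
interface MyElementArgs {
  title: string;
  counter: number;
  text: string;
}

// Stand-in for Storybook's StoryObj type so the sketch has no dependencies.
interface StorySketch {
  args: MyElementArgs;
}

// In a real *.stories.ts file, this would be the default export.
const meta = {
  title: "Example/My Element",
};

// One state per story, each filled with fixed, realistic content:
// no Math.random(), no Date.now(), nothing that changes between renders.
// In a real file, each of these would be a named export.
const Empty: StorySketch = {
  args: { title: "Inbox", counter: 0, text: "You're all caught up." },
};

const Counter: StorySketch = {
  args: { title: "Inbox", counter: 12, text: "12 unread messages from your team." },
};

const LongContent: StorySketch = {
  args: {
    title: "Quarterly planning notes for the design systems team",
    counter: 3,
    text: "Three proposals are awaiting review, including the new spacing scale, the revised color tokens, and the deprecation plan for the legacy button.",
  },
};
```

The LongContent story exists specifically to exercise text wrapping. That is the kind of real-world case a short placeholder string would never catch.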

Creating the Test Suite

Storybook conveniently generates a manifest of your stories whenever you build it. This manifest is a JSON file called index.json, written to the build output directory (storybook-static by default). It contains everything we need to create a series of tests, such as each story's ID and its associated tags. Here's an abridged example:

```json
{
  "v": 4,
  "entries": {
    "example-my-element--counter": {
      "id": "example-my-element--counter",
      "title": "Example/My Element",
      "name": "Counter",
      "tags": ["story", "visual:check"]
    },
    "example-my-element--overview": {
      "id": "example-my-element--overview",
      "title": "Example/My Element",
      "name": "Overview",
      "tags": ["story", "visual:check"]
    }
  }
}
```
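If you'd rather work with the manifest in a typed way, a minimal sketch might look like the code below. The type and function names are hypothetical. The types cover only the id, name, and tags fields, which are the ones this article relies on. Real entries carry more fields.

```typescript
// Hypothetical names for the parts of index.json this article relies on.
interface IndexEntry {
  id: string;
  name: string;
  tags: string[];
}

interface StorybookIndex {
  v: number;
  entries: Record<string, IndexEntry>;
}

// Parse the manifest text and do a cheap sanity check on its shape.
function parseIndex(json: string): StorybookIndex {
  const data = JSON.parse(json) as StorybookIndex;
  if (typeof data.v !== "number" || typeof data.entries !== "object" || data.entries === null) {
    throw new Error("This doesn't look like a Storybook index.json manifest");
  }
  return data;
}

// `entries` is keyed by story ID; an array is easier to filter and loop over.
function toEntries(index: StorybookIndex): IndexEntry[] {
  return Object.values(index.entries);
}
```

In Node, you could pass parseIndex the contents of storybook-static/index.json, read with fs.readFileSync.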

To create the actual tests, you'll first need to install Playwright in your project. After installing it, make a couple of minor tweaks to your playwright.config.ts file, such as setting an appropriate snapshot directory. This is also where you can modify the pixel difference threshold, although in most cases the default should suffice. I also recommend the HTML reporter, as it provides an interface for viewing the results.

```typescript
import { defineConfig } from "@playwright/test";

export default defineConfig({
  snapshotDir: "./tests",
  reporter: "html",
  testDir: "./tests",
  fullyParallel: true,
  forbidOnly: !!process.env.CI,
  retries: process.env.CI ? 2 : 0,
  workers: process.env.CI ? 1 : undefined,
  use: {
    trace: "on-first-retry",
  },
});
```
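If you do need to loosen the comparison, Playwright reads default options for toMatchSnapshot from the expect section of the config. The fragment below shows where they go. The values are examples, not recommendations. The other settings from the config above are elided.

```typescript
import { defineConfig } from "@playwright/test";

export default defineConfig({
  // ...snapshotDir, reporter, testDir and the other settings shown above...
  expect: {
    toMatchSnapshot: {
      // Acceptable perceived color difference per pixel, from 0 (strict) to 1 (lax).
      // Playwright's default is 0.2.
      threshold: 0.2,
      // Example value: fail only if more than 1% of all pixels differ.
      // By default, a single differing pixel fails the comparison.
      maxDiffPixelRatio: 0.01,
    },
  },
});
```

Keep these settings tight. A loose ratio can hide small but real regressions, such as a one-pixel border change.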

The test we create needs to do three things:

- Loop over the index.json file, filter which stories should be tested, and define a test for each.
- For each test, navigate to the component's "before" and "after" environments and capture a screenshot of each. My examples assume you have Storybook builds of both your upstream and current branches deployed somewhere. We'll point at them using the UPSTREAM_STORYBOOK_URL and STORYBOOK_URL environment variables.
- Compare the two screenshots and report the status back to the test runner.

Filtering

It's important to acknowledge that these tests are both resource- and time-intensive. The more your system scales, the longer the suite will take to run. At times, I've spent more time deciding how and when the suite should run than setting up the tests themselves. In some cases, you may want to limit which stories get tested. You might exclude certain stories because they don't represent a component, or you might make the suite opt-in so you have more control over how many tests are included.

To achieve this, you can define a tags property when you set up your stories. Tags are an arbitrary array of strings that we can use to decide which stories are tested. Tags set on the default export, as below, apply to every story in the file.

```typescript
export default {
  title: "Example/My Element",
  argTypes: {
    // "text" is Storybook's control type for string inputs.
    title: { control: "text" },
    counter: { control: "number" },
    text: { control: "text" },
  },
  tags: ["visual:check"],
};
```

You can then create a utility function that filters stories by checking for the presence (or absence) of a particular tag. We'll use it in an upcoming step. My example also requires the story tag. Storybook adds this tag to stories and tags docs pages with docs instead. Requiring it therefore excludes docs pages, which aren't stories and don't need testing.

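```typescript
// Keep only stories (not docs pages) that have opted in with the "visual:check" tag.
// Generic, so it works with any entry shape that has a tags array.
export const filterStories = <T extends { tags: string[] }>(stories: T[]): T[] =>
  stories.filter(
    (story) =>
      story.tags.includes("visual:check") && story.tags.includes("story"),
  );
```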
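If you'd rather make the suite opt-out, the same utility can check for the absence of a tag instead. This is a sketch. The visual:skip tag and the filterStoriesOptOut name are hypothetical.

```typescript
// Hypothetical opt-out variant: test every story unless it's tagged "visual:skip".
const filterStoriesOptOut = <T extends { tags: string[] }>(stories: T[]): T[] =>
  stories.filter(
    // Still require the "story" tag so docs pages are excluded.
    (story) => story.tags.includes("story") && !story.tags.includes("visual:skip"),
  );
```

Opt-out gives you broader coverage by default. Opt-in keeps the suite small and predictable while the team gets used to reviewing visual diffs.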

As your testing strategy matures, you may want to expand this utility to filter on other factors. One option is which files changed according to git. You could even go as far as selecting stories from a generated dependency graph. Ultimately, when the suite runs should be a team decision, so everyone understands when things are being tested.
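As a starting point for change-based filtering, here is a rough heuristic. It keeps only stories whose file sits in the same directory as a changed file. It assumes that stories live next to their components. It also relies on Storybook's index.json entries including an importPath field, which the sample above omits. The list of changed files could come from something like git diff --name-only origin/main...HEAD. The function name is hypothetical. It misses changes to shared dependencies, which is where a dependency graph would help.

```typescript
// Normalize "./src/a/b.ts" and "src/a/b.ts" to the same form.
const normalizePath = (path: string): string => path.replace(/^\.\//, "");

// Directory portion of a path ("" for files at the repository root).
const dirOf = (path: string): string => {
  const normalized = normalizePath(path);
  const lastSlash = normalized.lastIndexOf("/");
  return lastSlash === -1 ? "" : normalized.slice(0, lastSlash);
};

// Hypothetical helper: keep stories whose file shares a directory with a changed file.
function filterByChangedFiles<T extends { importPath: string }>(
  stories: T[],
  changedFiles: string[],
): T[] {
  const changedDirs = new Set(changedFiles.map(dirOf));
  return stories.filter((story) => changedDirs.has(dirOf(story.importPath)));
}
```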

Navigating Storybook

Before we create our test suite, we'll want to abstract some repeated tasks, particularly navigating Storybook and ensuring our content has loaded before capturing a screenshot.

Our first function returns the URL for the story we want to screenshot. It points at Storybook's iframe.html, which renders the story on its own, without the surrounding Storybook UI such as the sidebar and add-on panel. Query parameters select the story and the view mode.

```typescript
export function getStoryUrl(storybookUrl: string, id: string): string {
  const params = new URLSearchParams({
    id,
    viewMode: 'story',
    nav: '0',
  });

  return `${storybookUrl}/iframe.html?${params.toString()}`;
}
```
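Preview deployments often hand you base URLs with a trailing slash, such as one ending in /pr-123/. Those would produce a double slash in the URL built above. A slightly more defensive variant strips trailing slashes first. This is a sketch. The example base URL is a placeholder.

```typescript
// Variant of getStoryUrl that tolerates base URLs ending in "/".
function getStoryUrl(storybookUrl: string, id: string): string {
  const base = storybookUrl.replace(/\/+$/, ""); // strip trailing slashes
  const params = new URLSearchParams({
    id,
    viewMode: "story",
    nav: "0",
  });
  return `${base}/iframe.html?${params.toString()}`;
}
```

For example, getStoryUrl("https://storybook.example.com/", "example-my-element--counter") returns https://storybook.example.com/iframe.html?id=example-my-element--counter&viewMode=story&nav=0, the same URL you get without the trailing slash.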

The second function accepts Playwright's Page object, the base URL of the environment we want to capture, and the story's ID. It calls getStoryUrl to build the URL, navigates to it, and waits until the story has rendered, so the test can then capture the screenshot.

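```typescript
import type { Page } from '@playwright/test';

// getStoryUrl is defined in the same utils file.
export async function navigate(
  page: Page,
  storybookUrl: string,
  id: string,
): Promise<void> {
  try {
    const url = getStoryUrl(storybookUrl, id);
    await page.goto(url);
    // Wait for network activity to settle, then for the story's root element to be visible.
    await page.waitForLoadState('networkidle');
    await page.waitForSelector('#storybook-root');
  } catch (error) {
    // Handle errors here, e.g. when the steps above time out because the story 404s.
  }
}
```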

You'll need to consider error handling here. When a story exists in both environments, #storybook-root renders as expected. However, if you introduce a new component, the upstream environment doesn't have its stories yet. There's no "before" screenshot to capture, and the steps above will time out. You could skip the test in that case or capture a screenshot of the not-found state; it's up to you.
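If you'd rather skip new stories up front instead of waiting for a timeout, one option is to compare the current manifest with the upstream one. This sketch assumes the upstream deployment serves its index.json at the root of UPSTREAM_STORYBOOK_URL, as a standard storybook-static build does. Fetching that file is left out. The findNewStoryIds name is hypothetical.

```typescript
// Only the part of each index.json we need.
interface IndexLike {
  entries: Record<string, { id: string }>;
}

// Story IDs present in the current build but missing upstream: these have no
// "before" screenshot to compare against.
function findNewStoryIds(current: IndexLike, upstream: IndexLike): Set<string> {
  const upstreamIds = new Set(Object.keys(upstream.entries));
  return new Set(Object.keys(current.entries).filter((id) => !upstreamIds.has(id)));
}

// Possible use inside each test (assumes `newStoryIds` was computed up front):
//   test.skip(newStoryIds.has(story.id), "Story doesn't exist upstream yet");
```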

Capturing the Differences

We're now ready to capture screenshots and compare them. The setup of these tests is relatively straightforward: we define a new test for every item in the filtered array of stories. Before each test, we capture a screenshot of the story in the upstream environment and save it where Playwright expects to find that test's baseline snapshot. The test itself then captures the same story in the current environment and uses Playwright's toMatchSnapshot assertion to compare the two.

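```typescript
import { test, expect } from "@playwright/test";
import { filterStories, navigate } from "../utils";
import manifest from "../storybook-static/index.json";

// Read a required environment variable, failing fast if it's missing.
function requireEnv(name: string): string {
  const value = process.env[name];
  if (!value) throw new Error(`${name} must be set to run the visual tests`);
  return value;
}

const upstreamUrl = requireEnv("UPSTREAM_STORYBOOK_URL");
const currentUrl = requireEnv("STORYBOOK_URL");

// `as const` keeps "disabled" as a literal type, which page.screenshot() expects.
const options = {
  fullPage: true,
  animations: "disabled",
} as const;

test.beforeEach(async ({ page }, testInfo) => {
  /**
   * Set the viewport size and other global level browser settings here.
   * For example, you may want to block certain resources to enhance test stability.
   */
  await page.setViewportSize({ width: 1920, height: 1080 });

  // Each test is titled with its story ID, so load that story upstream ("before").
  await navigate(page, upstreamUrl, testInfo.title);

  // Save the upstream screenshot where toMatchSnapshot() will look for its baseline.
  // snapshotPath() applies the same naming rules (including the platform suffix).
  await page.screenshot({
    ...options,
    path: testInfo.snapshotPath(`${testInfo.title}-upstream.png`),
  });
});

const visualStories = filterStories(Object.values(manifest.entries));

visualStories.forEach((story) => {
  test(story.id, async ({ page }) => {
    // Load the same story in the current ("after") environment.
    await navigate(page, currentUrl, story.id);
    const screenshot = await page.screenshot(options);

    // Compare against the upstream screenshot saved in beforeEach.
    expect(screenshot).toMatchSnapshot(`${story.id}-upstream.png`);
  });
});
```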

If you have the HTML reporter configured, run npx playwright show-report once your tests have finished to view the results. The report shows the expected and actual screenshots side by side, along with a diff that highlights the changed pixels.

As mentioned earlier, how and when you run these tests is up to you. In a CI/CD environment such as GitHub Actions or CircleCI, you may find it helpful to fail the job when a visual change is detected. You can then comment on the pull request with a link to the generated report. I like to make the visual test job non-blocking for merges, so intended visual changes can proceed. The upstream environment can then be updated once the reviewers have given everything the green light. Playwright offers several reporters for working with the test results, including a JSON reporter.
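If you enable Playwright's JSON reporter alongside the HTML one, a small script can turn the results into a pull request comment. This sketch assumes a minimal subset of that reporter's output: nested suites, each with specs that carry a title and an ok flag. Check those fields against your Playwright version. The failedSpecs and commentBody functions and the report URL are hypothetical. Posting the comment is left to your CI tooling.

```typescript
// Assumed minimal subset of Playwright's JSON reporter output.
interface JsonSpec {
  title: string;
  ok: boolean;
}

interface JsonSuite {
  title: string;
  specs: JsonSpec[];
  suites?: JsonSuite[];
}

interface JsonReport {
  suites: JsonSuite[];
}

// Collect the titles (story IDs, in this setup) of every spec that didn't pass.
function failedSpecs(report: JsonReport): string[] {
  const failed: string[] = [];
  const walk = (suite: JsonSuite): void => {
    for (const spec of suite.specs) {
      if (!spec.ok) failed.push(spec.title);
    }
    suite.suites?.forEach(walk);
  };
  report.suites.forEach(walk);
  return failed;
}

// Build a short Markdown comment linking to the hosted HTML report.
function commentBody(failed: string[], reportUrl: string): string {
  if (failed.length === 0) return "No visual changes detected.";
  const list = failed.map((id) => `- ${id}`).join("\n");
  const plural = failed.length === 1 ? "" : "s";
  return `${failed.length} visual change${plural} detected:\n${list}\n\nReview the report: ${reportUrl}`;
}
```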

Conclusion

Visual testing is complex. You'll likely need to make several changes along the way, both to how your test suite is set up and to how you interact with and respond to its results. It requires discipline from your team and plenty of automation to ensure flagged results are properly checked and respected. If you can overcome the initial hurdles, this type of testing can be a potent tool in your development arsenal.
